Model Context Protocol (MCP) – Integration

Artificial intelligence has made rapid progress in recent years. What is still missing in many organizations, however, is a clean, standardized, and secure way to integrate AI models into existing software, data, and automation landscapes. This is exactly where the Model Context Protocol (MCP) comes into play.

MCP is not another AI framework. It is an open protocol that defines how AI models interact with tools, services, and data sources in a structured and controlled way. Its purpose is to move AI out of isolated proof-of-concepts and turn it into a reliable, productive system component.

The Core Problem with Today’s AI Integrations

In many real-world projects, AI models are:

- tightly coupled to proprietary APIs

- connected through custom, one-off “glue code”

- operated without a clearly defined concept of context or state

This typically results in:

- high maintenance effort

- limited reusability

- poor auditability of data flows

- increased security risks due to uncontrolled tool access

In critical infrastructure, the energy sector, or public transportation, these weaknesses are not acceptable. What is required instead are clear interfaces, defined responsibilities, and controlled execution models.

What MCP Does Differently

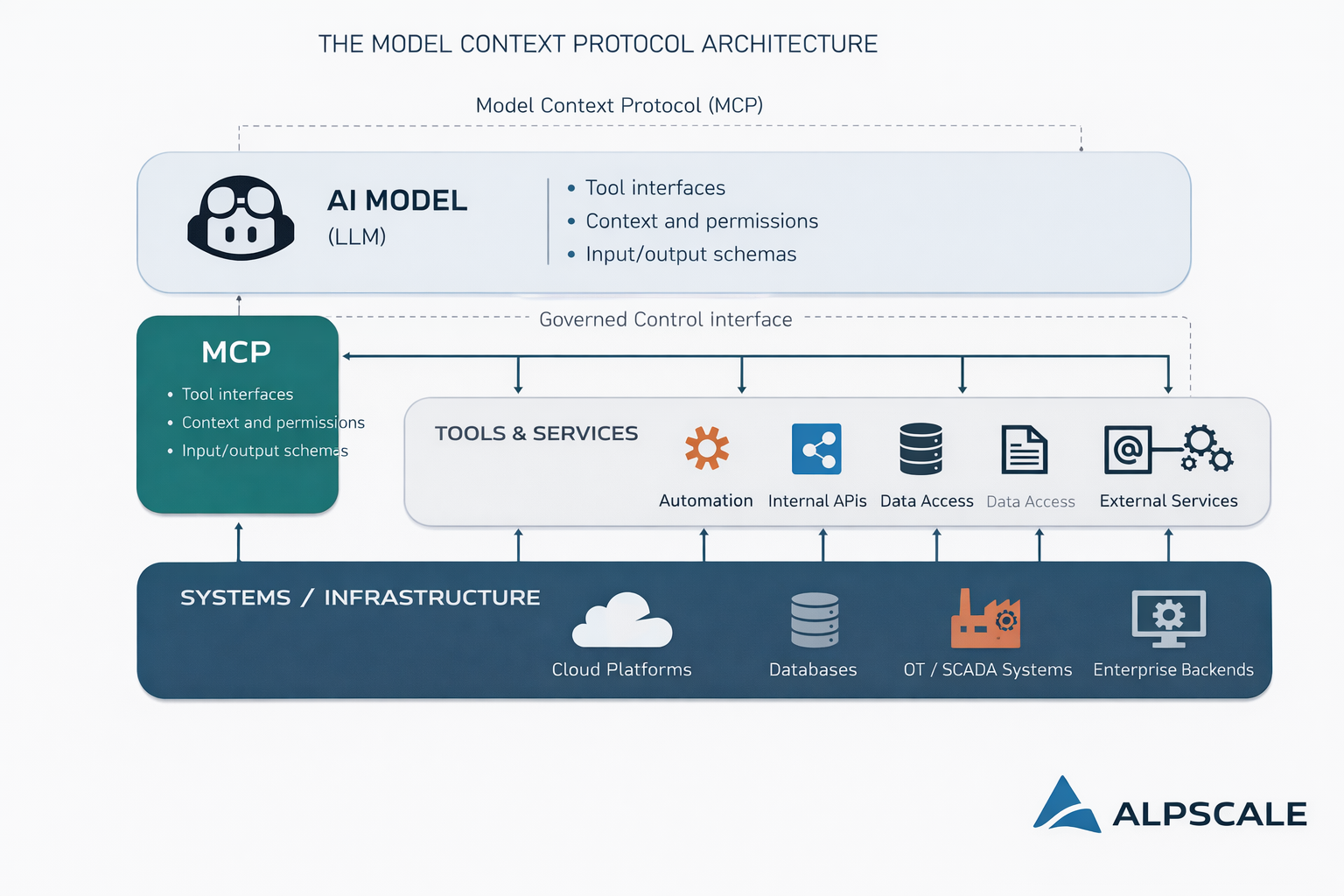

The Model Context Protocol defines a formal interaction layer between:

- AI models

- tools and functions

- data sources

- backend services

It specifies not only what a model is allowed to do, but also how, in which context, and under which constraints it may operate.

Key Concepts of MCP

1. Structured Context: MCP provides the model with a well-defined context, including available tools, permitted actions, and relevant metadata. The model does not operate freely, but within a clearly bounded and controllable scope.
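To make the idea of a bounded context concrete, here is a minimal sketch in plain Python. It does not use an MCP SDK. The names `ToolDescriptor`, `ModelContext`, `build_context`, and the example tools `read_meter` and `open_breaker` are hypothetical and chosen only for illustration. The sketch assumes the host application keeps an allow-list and shows the model only the tools on it.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ToolDescriptor:
    """Hypothetical in-memory description of one tool."""
    name: str
    description: str
    input_schema: dict


@dataclass
class ModelContext:
    """What the model gets to see: visible tools plus metadata."""
    tools: list
    metadata: dict = field(default_factory=dict)


def build_context(all_tools, permitted, metadata):
    """Expose only the tools whose names are on the allow-list."""
    visible = [tool for tool in all_tools if tool.name in permitted]
    return ModelContext(tools=visible, metadata=dict(metadata))


# Illustrative tool catalogue (assumption, not taken from a real system).
ALL_TOOLS = [
    ToolDescriptor(
        "read_meter",
        "Read the latest value of a meter",
        {"type": "object",
         "properties": {"meter_id": {"type": "string"}},
         "required": ["meter_id"]},
    ),
    ToolDescriptor(
        "open_breaker",
        "Open a circuit breaker",
        {"type": "object",
         "properties": {"breaker_id": {"type": "string"}},
         "required": ["breaker_id"]},
    ),
]

# A read-only session: the model never learns that open_breaker exists.
context = build_context(
    ALL_TOOLS,
    permitted={"read_meter"},
    metadata={"site": "demo-substation", "mode": "read-only"},
)
```

In this sketch the scope is enforced before the model is involved at all: a tool that is not in the context cannot be requested by name.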

2. Declarative Tool Interfaces: Functions, APIs, and services are described declaratively instead of being hard-wired. This enables:

- interchangeability

- versioning

- clear ownership and responsibility
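A declarative description might look like the following sketch. The `name`, `description`, and `inputSchema` fields follow the shape MCP uses for tool definitions, with `inputSchema` being a JSON Schema object. The `version` and `owner` fields are hypothetical registry metadata added here for illustration and are not part of the tool definition itself. `validate_arguments` is a deliberately minimal stand-in for a real JSON Schema validator.

```python
# Tool described as data, not as hard-wired code.
READ_METER_TOOL = {
    "name": "read_meter",
    "description": "Return the latest reading for one meter.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "meter_id": {"type": "string"},
            "unit": {"type": "string"},
        },
        "required": ["meter_id"],
    },
}

# Hypothetical registry entry: version and owner live next to the tool.
REGISTRY_ENTRY = {
    "tool": READ_METER_TOOL,
    "version": "1.2.0",
    "owner": "metering-team",
}

# Only the JSON Schema types used in this example are mapped.
_JSON_TYPES = {"string": str, "boolean": bool}


def validate_arguments(schema, arguments):
    """Return a list of problems; an empty list means the call is acceptable.

    Checks required keys, unknown keys, and the mapped primitive types only.
    """
    problems = []
    properties = schema.get("properties", {})
    for key in schema.get("required", []):
        if key not in arguments:
            problems.append(f"missing required argument: {key}")
    for key, value in arguments.items():
        if key not in properties:
            problems.append(f"unknown argument: {key}")
            continue
        expected = _JSON_TYPES.get(properties[key].get("type"))
        if expected is not None and not isinstance(value, expected):
            problems.append(f"wrong type for {key}")
    return problems
```

Because the interface is data, the backend behind `read_meter` can be swapped or versioned without changing what the model sees, as long as the description stays the same.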

3. Separation of AI Logic and System Logic: The model decides what should happen by requesting a tool call, but it does not execute actions directly. Execution is handled by explicitly defined MCP tools. This significantly improves security, traceability, and testability.
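The split between deciding and executing can be sketched as follows. MCP messages are JSON-RPC 2.0, and a tool invocation is a `tools/call` request carrying a tool name and arguments. Everything else here is an assumption for illustration: the `read_meter` handler returns canned data, `handle_request` is a hypothetical dispatcher, and the sketch leaves out transport, session initialization, and argument validation.

```python
import json


def read_meter(meter_id):
    """Stand-in for a real backend call; returns canned data."""
    return {"meter_id": meter_id, "value_kwh": 42.0}


# Only functions registered here can ever be executed.
HANDLERS = {"read_meter": read_meter}


def handle_request(raw_request):
    """Execute a tools/call request on the system side, not in the model."""
    request = json.loads(raw_request)
    request_id = request.get("id")
    if request.get("method") != "tools/call":
        return {"jsonrpc": "2.0", "id": request_id,
                "error": {"code": -32601, "message": "Method not found"}}
    params = request.get("params", {})
    name = params.get("name")
    handler = HANDLERS.get(name)
    if handler is None:
        return {"jsonrpc": "2.0", "id": request_id,
                "error": {"code": -32602, "message": f"Unknown tool: {name}"}}
    result = handler(**params.get("arguments", {}))
    return {"jsonrpc": "2.0", "id": request_id,
            "result": {"content": [{"type": "text", "text": json.dumps(result)}],
                       "isError": False}}


# What the model produces is only a request, expressed as data.
model_request = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "read_meter", "arguments": {"meter_id": "M-17"}},
})
response = handle_request(model_request)
```

Since the request is plain data, the dispatcher can be unit-tested without any model in the loop, which is where the testability gain comes from.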

4. Scalability and Reuse: Once defined, MCP tools can be reused across multiple models, services, and use cases with little additional integration effort.

Why MCP Matters for Industrial and Critical Infrastructure Environments

In security-critical domains, the requirements are fundamentally different from those in consumer applications:

- traceability

- auditability

- access control

- strict separation of responsibilities

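MCP supports these requirements by routing every tool invocation through an explicit, inspectable interface. This gives operators a single place to log, authorize, and restrict what a model can trigger, so AI can be applied where it adds value without undermining existing security, compliance, and governance models.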
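As an illustration of how access control and auditability can sit on that interface, here is a small host-side policy sketch. MCP itself does not define roles or an audit log format. The role names, the `ROLE_PERMISSIONS` table, the `schedule_inspection` and `open_breaker` tools, and the `authorize_and_log` function are all hypothetical.

```python
from datetime import datetime, timezone

# Hypothetical policy: which role may request which tools.
ROLE_PERMISSIONS = {
    "operator-assistant": {"read_meter"},
    "maintenance-assistant": {"read_meter", "schedule_inspection"},
}

# In-memory audit trail; a real system would use durable storage.
AUDIT_LOG = []


def authorize_and_log(role, tool_name, arguments):
    """Decide whether a tool request is allowed and record the decision."""
    allowed = tool_name in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "tool": tool_name,
        "arguments": arguments,
        "allowed": allowed,
    })
    return allowed


# Both the permitted and the denied request leave an audit record.
first = authorize_and_log("operator-assistant", "read_meter", {"meter_id": "M-17"})
second = authorize_and_log("operator-assistant", "open_breaker", {"breaker_id": "B-3"})
```

Denied requests are logged as well as permitted ones, so the trail shows what the model attempted, not only what was executed.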

MCP becomes a key enabler for trustworthy, production-grade AI when combined with:

- OT-adjacent systems

- SCADA environments

- cloud-based control and management platforms

Our Approach at ALPSCALE

At ALPSCALE, we already use MCP in our internal tools and development platforms. This is not driven by experimentation, but by a very pragmatic goal: increasing productivity.

The results are tangible:

- reduced integration effort

- faster development cycles

- clearer system architectures

- a clean separation between AI, domain logic, and infrastructure

Engineers can focus again on what truly matters: solving problems instead of fixing integrations.

MCP helps us treat AI as a first-class system component, not a special case.

Conclusion: MCP Is Architecture, Not a Trend

The Model Context Protocol is not short-lived hype. It is a logical response to the growing complexity of AI-enabled systems.

Anyone who wants to deploy AI in a way that is:

- scalable

- secure

- maintainable

- and suitable for industrial environments

will inevitably need clear protocols and standards.

For us, MCP is a core building block of that future—and another step toward system integration, rethought.